Hi! I am a first-year Ph.D. student at the University of Electronic Science and Technology of China, advised by Shuhang Gu. I received my B.S. degree from the School of Artificial Intelligence, Xidian University, in 2023.

My earlier research focused on low-level vision, such as image/video restoration and image enhancement. My current research interest lies in image and video generation. What has remained unchanged is my excitement about building more efficient models, in both training and inference. I believe this will ultimately benefit the development of the AI community.

If you are interested in collaborating on low-level vision or image/video generation, please feel free to contact me.

🔥 News

- 2025.06: 🎉🎉 Our work “Consistency Trajectory Matching for One-Step Generative Super-Resolution” (CTMSR) was accepted to ICCV 2025.

- 2025.02: 🎉🎉 Our work “Progressive Focused Transformer for Single Image Super-Resolution” (PFT-SR) was accepted to CVPR 2025.

- 2025.02: 🎉🎉 Our work “Learned Image Compression with Dictionary-based Entropy Model” (DCAE) was accepted to CVPR 2025.

- 2024.03: 🎉🎉 Our work “Video Super-Resolution Transformer with Masked Inter&Intra-Frame Attention” (MIA-VSR) was accepted to CVPR 2024.

- 2024.03: 🎉🎉 Our work “Improved Implicit Neural Representation with Fourier Reparameterized Training” (FR-INR) was accepted to CVPR 2024.

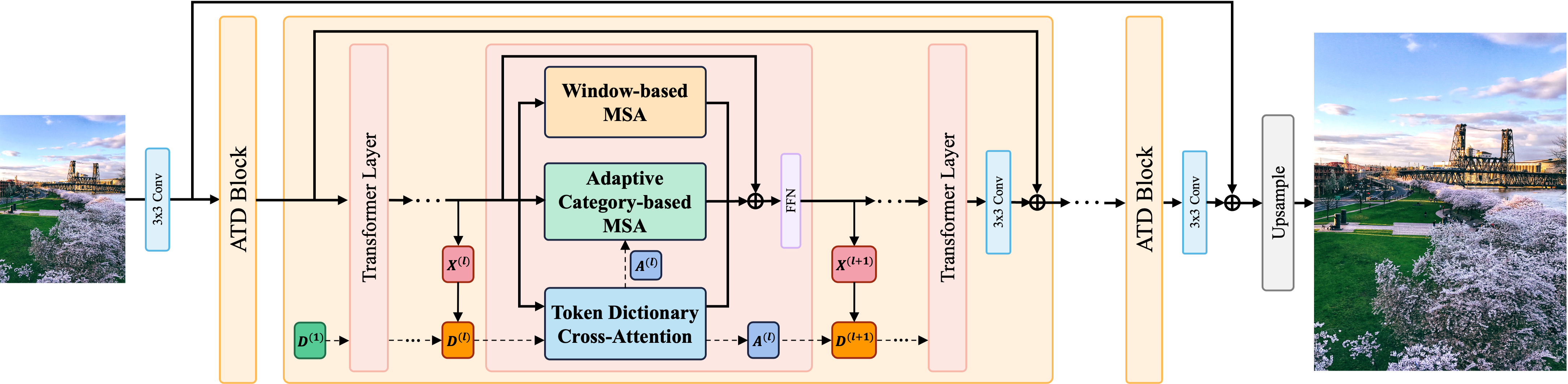

- 2024.03: 🎉🎉 Our work “Transcending the Limit of Local Window: Advanced Super-Resolution Transformer with Adaptive Token Dictionary” (ATD-SR) was accepted to CVPR 2024.

👀 arXiv

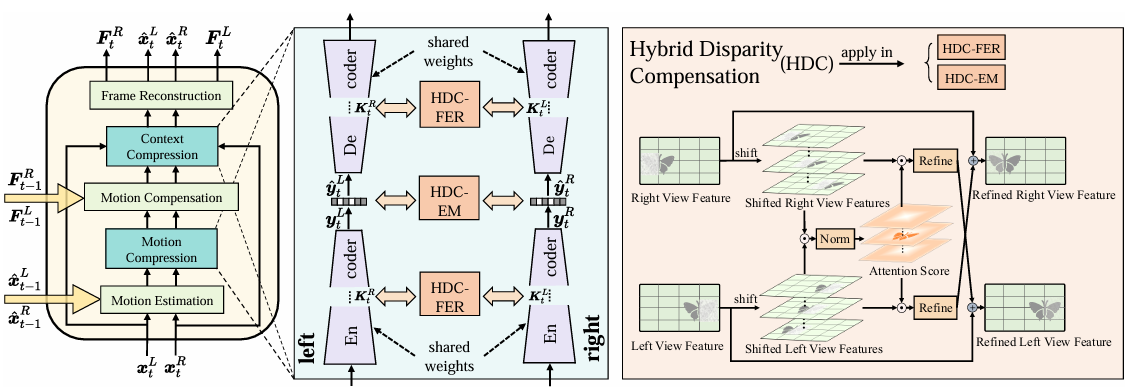

Xingyu Zhou, Wei Long, Jingbo Lu, Shiyin Jiang, Weiyi You, Haifeng Wu, Shuhang Gu

Neural Stereo Video Compression with Hybrid Disparity Compensation

Shiyin Jiang, Zhenghao Chen, Minghao Han, Xingyu Zhou, Leheng Zhang, Shuhang Gu

📝 Publications

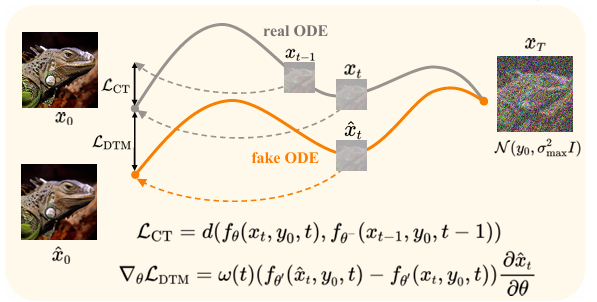

Consistency Trajectory Matching for One-Step Generative Super-Resolution

Weiyi You, Mingyang Zhang, Leheng Zhang, Xingyu Zhou, Kexuan Shi, Shuhang Gu

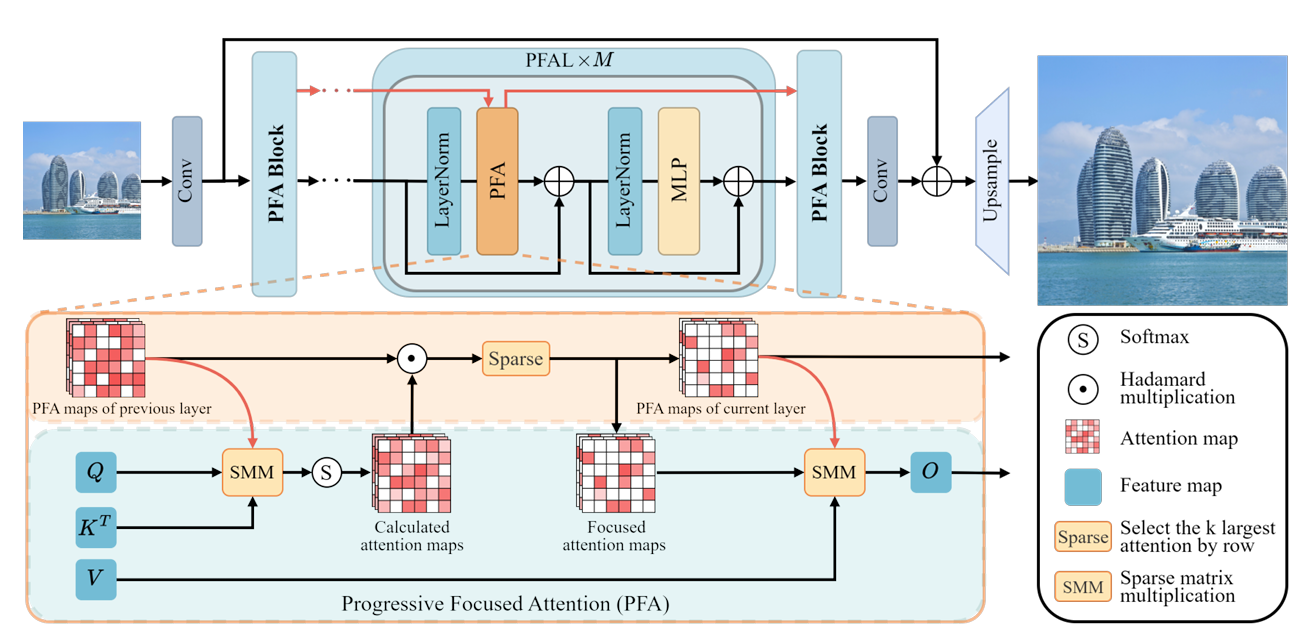

Progressive Focused Transformer for Single Image Super-Resolution

Wei Long, Xingyu Zhou, Leheng Zhang, Shuhang Gu

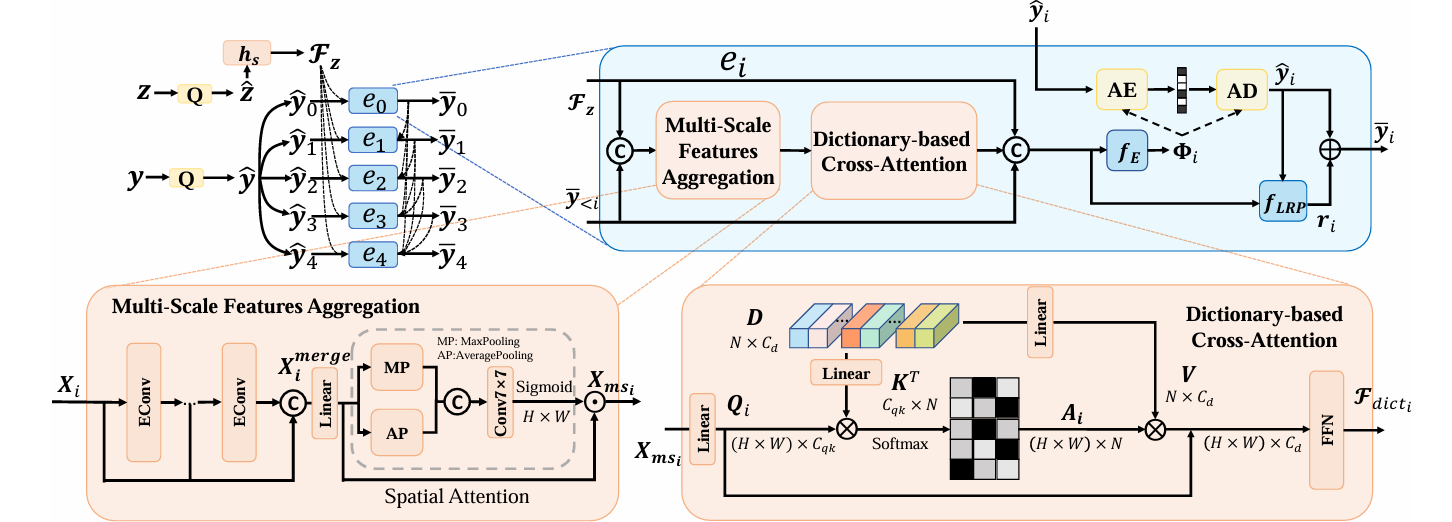

Learned Image Compression with Dictionary-based Entropy Model

Jingbo Lu, Leheng Zhang, Xingyu Zhou, Mu Li, Wen Li, Shuhang Gu

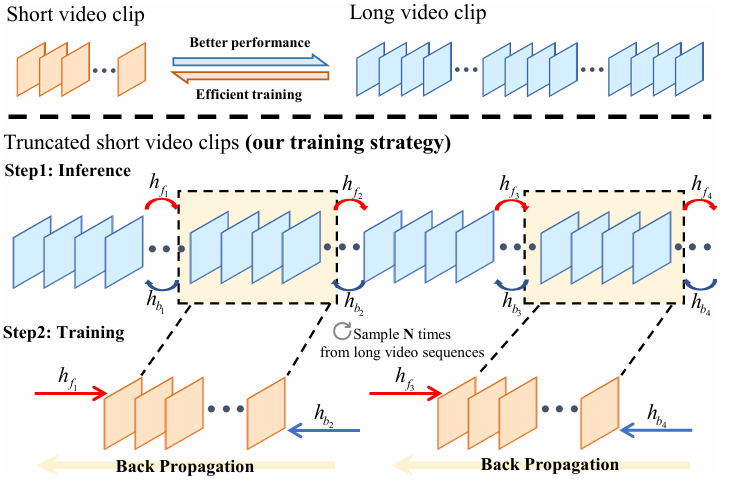

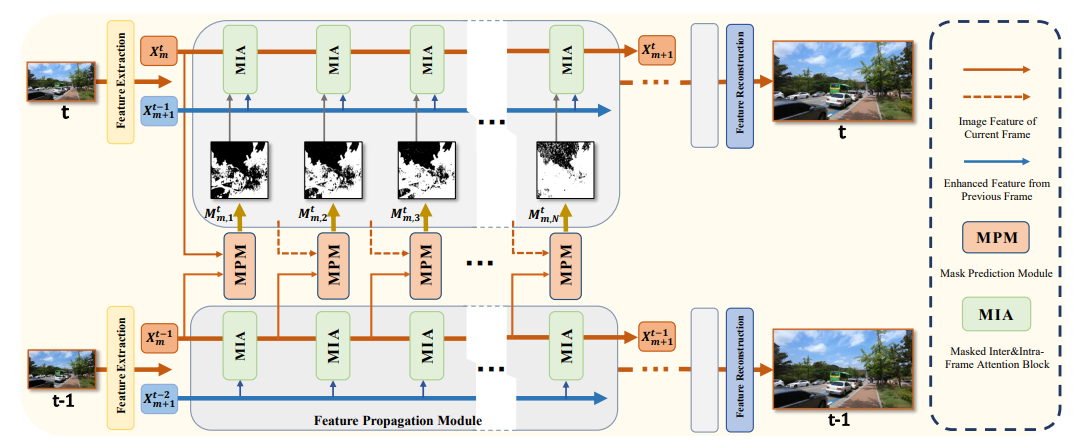

Video Super-Resolution Transformer with Masked Inter&Intra-Frame Attention

Xingyu Zhou, Leheng Zhang, Xiaorui Zhao, Keze Wang, Leida Li, Shuhang Gu

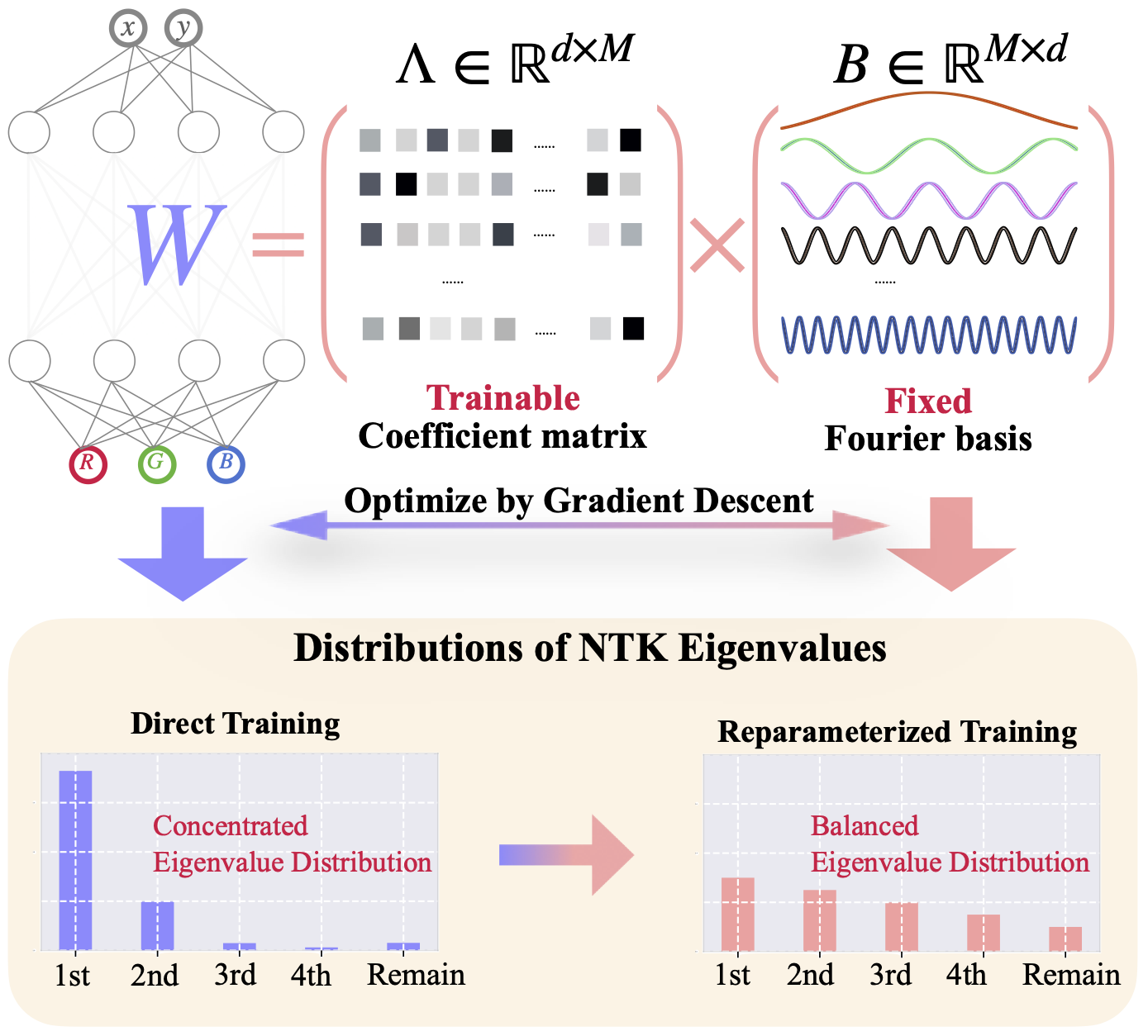

Improved Implicit Neural Representation with Fourier Reparameterized Training

Kexuan Shi, Xingyu Zhou, Shuhang Gu

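Transcending the Limit of Local Window: Advanced Super-Resolution Transformer with Adaptive Token Dictionary

Leheng Zhang, Yawei Li, Xingyu Zhou, Xiaorui Zhao, Shuhang Gu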